jinni

Bring your project into LLM context - tool and MCP server

1

Github Watches

8

Github Forks

173

Github Stars

Jinni: Bring Your Project Into Context

Jinni is a tool to efficiently provide Large Language Models the context of your projects. It gives a consolidated view of relevant project files complete with metadata, overcoming the limitations and inefficiencies of reading files one by one.

The philosophy behind this tool is that LLM context windows are large, models are smart, and directly seeing your project best equips the model to help with anything you throw at it.

There is an MCP (Model Context Protocol) server for integration with AI tools and a command-line utility (CLI) for manual use that copies project context to the clipboard ready to paste wherever you need it.

These tools are opinionated about what counts as relevant project context to best work out of the box in most use cases, automatically excluding:

* Binary files

* Dotfiles and hidden directories

* Common naming conventions for logs, build directories, tempfiles, etc

Inclusions/exclusions are customizable with complete granularity if required using .contextfiles - this works like .gitignore except defining inclusions.

The MCP server can provide as much or as little of the project as desired. By default the scope is the whole project, but the model can ask for specific modules / matching patterns / etc.

MCP Quickstart

MCP server config for Cursor / Roo / Claude Desktop / client of choice:

{

"mcpServers": {

"jinni": {

"command": "uvx jinni-server"

// Optionally constrain the server to only read within a tree (recommended for security):

// "command": "uvx jinni-server --root /absolute/path/"

}

}

}

Install uv if it is not on your system: https://docs.astral.sh/uv/getting-started/installation/

Reload your IDE and you can now ask the agent to read in context.

If you want to restrict this to particular modules / paths just ask - e.g. "Read context for tests".

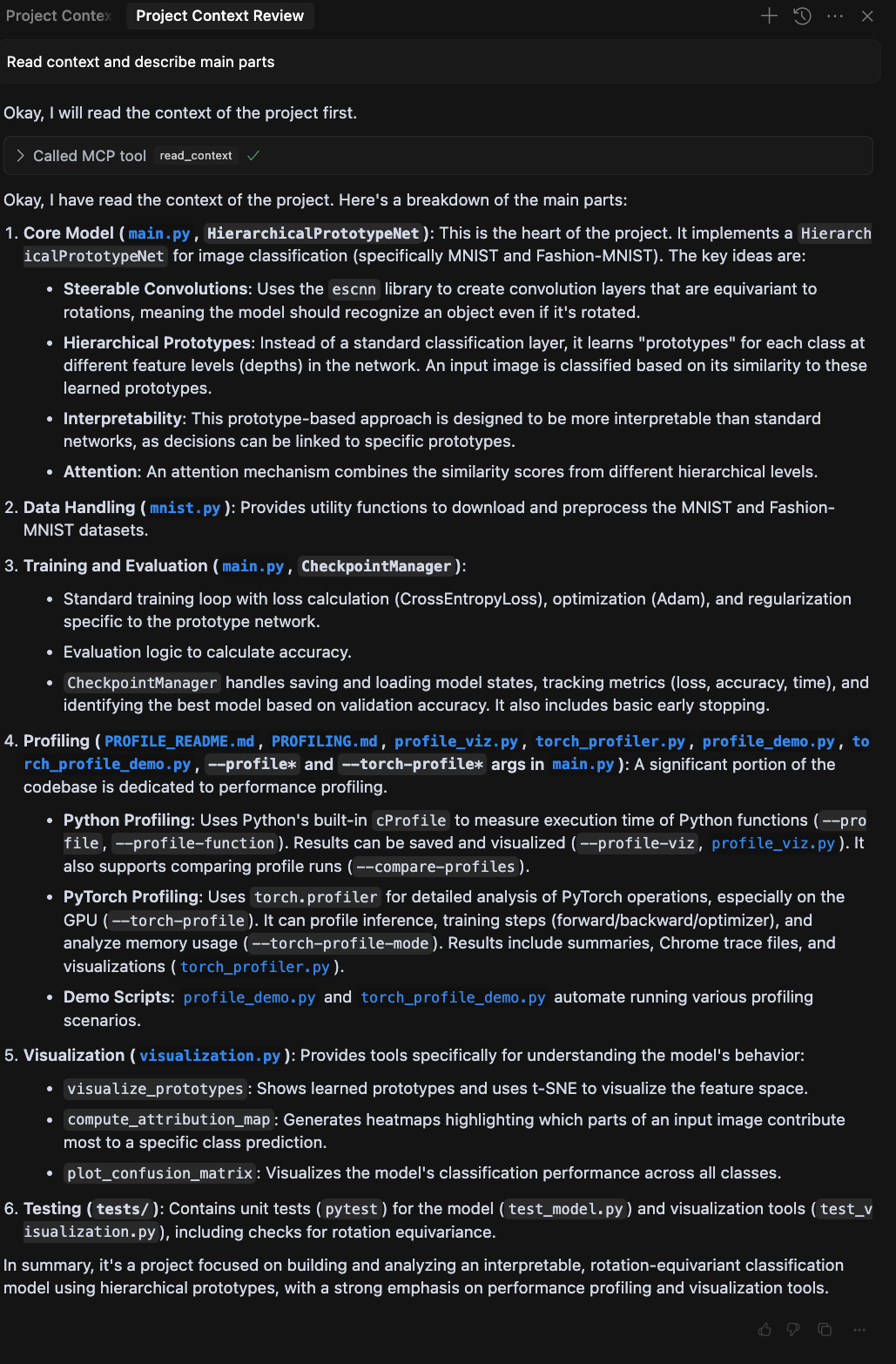

In action with Cursor:

Note For Cursor Users

Cursor can silently drop context that is larger than the allowed maximum, so if you have a sizable project and the agent acts like the tool call never happened, try reducing what you are bringing in ("read context for xyz")

Components

-

jinniMCP Server:- Integrates with MCP clients like Cursor, Cline, Roo, Claude Desktop, etc.

- Exposes a

read_contexttool that returns a concatenated string of relevant file contents from a specified project directory.

-

jinniCLI:- A command-line tool for manually generating the project context dump.

- Useful for feeding context to LLMs via copy-paste or file input. Or pipe the output wherever you need it.

Features

- Efficient Context Gathering: Reads and concatenates relevant project files in one operation.

-

Intelligent Filtering (Gitignore-Style Inclusion):

- Uses a system based on

.gitignoresyntax (pathspeclibrary'sgitwildmatch). - Supports hierarchical configuration using

.contextfilesplaced within your project directories. Rules are applied dynamically based on the file/directory being processed. -

Matching Behavior: Patterns match against the path relative to the target directory being processed (or the project root if no specific target is given). For example, if targeting

src/, a rule!app.pyinsrc/.contextfileswill matchapp.py. Output paths remain relative to the original project root. -

Overrides: Supports

--overrides(CLI) orrules(MCP) to use a specific set of rules exclusively. When overrides are active, both built-in default rules and any.contextfilesare ignored. Path matching for overrides is still relative to the target directory. - Explicit Target Inclusion: Files explicitly provided as targets are always included (bypassing rule checks, but not binary/size checks). Directories explicitly provided as targets are always entered, and rule discovery/matching then proceeds relative to that target directory.

- Uses a system based on

-

Customizable Configuration (

.contextfiles/ Overrides):- Define precisely which files/directories to include or exclude using

.gitignore-style patterns applied to the relative path. - Patterns starting with

!negate the match (an exclusion pattern). (See Configuration section below).

- Define precisely which files/directories to include or exclude using

-

Large Context Handling: Aborts with a

DetailedContextSizeErrorif the total size of included files exceeds a configurable limit (default: 100MB). The error message includes a list of the 10 largest files contributing to the size, helping you identify candidates for exclusion. See the Troubleshooting section for guidance on managing context size. -

Metadata Headers: Output includes file path, size, and modification time for each included file (can be disabled with

list_only). - Encoding Handling: Attempts multiple common text encodings (UTF-8, Latin-1, etc.).

- List Only Mode: Option to only list the relative paths of files that would be included, without their content.

Usage

MCP Server (read_context tool)

-

Setup: Configure your MCP client (e.g., Claude Desktop's

claude_desktop_config.json) to run thejinniserver viauvx. -

Invocation: When interacting with your LLM via the MCP client, the model can invoke the

read_contexttool.-

project_root(string, required): The absolute path to the project root directory. Rule discovery and output paths are relative to this root. -

targets(JSON array of strings, required): Specifies a mandatory list of file(s)/directory/ies withinproject_rootto process. Must be a JSON array of string paths (e.g.,["path/to/file1", "path/to/dir2"]). Paths can be absolute or relative to CWD. All target paths must resolve to locations insideproject_root. If an empty list[]is provided, the entireproject_rootis processed. -

rules(JSON array of strings, required): A mandatory list of inline filtering rules (using.gitignore-style syntax, e.g.,["src/**/*.py", "!*.tmp"]). Provide an empty list[]if no specific rules are needed (this will use built-in defaults). If non-empty, these rules are used exclusively, ignoring built-in defaults and.contextfiles. -

list_only(boolean, optional): If true, returns only the list of relative file paths instead of content. -

size_limit_mb(integer, optional): Override the context size limit in MB. -

debug_explain(boolean, optional): Enable debug logging on the server.

-

Output: The tool returns a single string containing the concatenated content (with headers) or the file list. Paths in headers/lists are relative to the provided

project_root. In case of a context size error, it returns aDetailedContextSizeErrorwith details about the largest files.

-

MCP Server (usage tool)

-

Invocation: The model can invoke the

usagetool (no arguments needed). -

Output: Returns the content of the

README.mdfile as a string.

(Detailed server setup instructions will vary depending on your MCP client. Generally, you need to configure the client to execute the Jinni server.)

Running the Server:

-

Recommended Method: Use

uvxto run the server entry point directly (requires thejinnipackage to be published on PyPI or findable byuvx):

Example MCP client configuration (e.g.,uvx jinni-server [OPTIONS]claude_desktop_config.json):{ "mcpServers": { "jinni": { "command": "uvx jinni-server" // Optionally constrain the server to only read within a tree (recommended for security): // "command": "uvx jinni-server --root /absolute/path/" } } }

Consult your specific MCP client's documentation for precise setup steps. Ensure uv (for uvx) or the correct Python environment (for python -m) is accessible. The usage tool corresponds to the jinni usage CLI command.

Command-Line Utility (jinni CLI)

jinni [OPTIONS] [<PATH...>]

-

<PATH...>(optional): One or more paths to the project directories or files to analyze. Defaults to the current directory (.) if none are provided. -

-r <DIR>/--root <DIR>(optional): Specify the project root directory. If provided, rule discovery starts here, and output paths are relative to this directory. If omitted, the root is inferred from the common ancestor of the<PATH...>arguments (or CWD if only '.' is processed). -

--output <FILE>/-o <FILE>(optional): Write the output to<FILE>instead of printing to standard output. -

--list-only/-l(optional): Only list the relative paths of files that would be included. -

--overrides <FILE>(optional): Use rules from<FILE>instead of discovering.contextfiles. -

--size-limit-mb <MB>/-s <MB>(optional): Override the maximum context size in MB. -

--debug-explain(optional): Print detailed inclusion/exclusion reasons to stderr andjinni_debug.log. -

--root <DIR>/-r <DIR>(optional): See above. -

--no-copy(optional): Prevent automatically copying the output content to the system clipboard when printing to standard output (the default is to copy).

Installation

You can install Jinni using pip or uv:

Using pip:

pip install jinni

Using uv:

uv pip install jinni

This will make the jinni CLI command available in your environment. See the "Running the Server" section above for how to start the MCP server depending on your installation method.

Examples

-

Dump context of

my_project/to the console:jinni ./my_project/ # Process a single directory jinni ./src ./docs/README.md # Process multiple targets jinni # Process current directory (.) -

List files that would be included in

my_project/without content:jinni -l ./my_project/ jinni --list-only ./src ./docs/README.md -

Dump context of

my_project/to a file namedcontext_dump.txt:jinni -o context_dump.txt ./my_project/ -

Use override rules from

custom.rulesinstead of.contextfiles:jinni --overrides custom.rules ./my_project/ -

Show debug information:

jinni --debug-explain ./src -

Dump context (output is automatically copied to clipboard by default):

jinni ./my_project/ -

Dump context but do not copy to clipboard:

jinni --no-copy ./my_project/

Configuration (.contextfiles & Overrides)

Jinni uses .contextfiles (or an override file) to determine which files and directories to include or exclude, based on .gitignore-style patterns.

- Core Principle: Rules are applied dynamically during traversal, relative to the current target directory being processed.

-

Location (

.contextfiles): Place.contextfilesin any directory. When processing a directory (either the initial target or a subdirectory), Jinni looks for.contextfilesstarting from that directory downwards. Rules from parent directories outside the current target directory are ignored when processing inside that target. - Format: Plain text, UTF-8 encoded, one pattern per line.

-

Syntax: Uses standard

.gitignorepattern syntax (specificallypathspec'sgitwildmatchimplementation).-

Comments: Lines starting with

#are ignored. -

Inclusion Patterns: Specify files/directories to include (e.g.,

src/**/*.py,*.md,/config.yaml). -

Exclusion Patterns: Lines starting with

!indicate that a matching file should be excluded (negates the pattern). -

Anchoring: A leading

/anchors the pattern to the directory containing the.contextfiles. -

Directory Matching: A trailing

/matches directories only. -

Wildcards:

*,**,?work as in.gitignore.

-

Comments: Lines starting with

-

Rule Application Logic:

- Determine Target: Jinni identifies the target directory (either explicitly provided or the project root).

-

Override Check: If

--overrides(CLI) orrules(MCP) are provided, these rules are used exclusively. All.contextfilesand built-in defaults are ignored. Path matching is relative to the target directory. -

Dynamic Context Rules (No Overrides): When processing a file or subdirectory within the target directory:

- Jinni finds all

.contextfilesstarting from the target directory down to the current item's directory. - It combines the rules from these discovered

.contextfileswith the built-in default rules. - It compiles these combined rules into a specification (

PathSpec). - It matches the current file/subdirectory path, calculated relative to the target directory, against this specification.

- Jinni finds all

-

Matching: The last pattern in the combined rule set that matches the item's relative path determines its fate.

!negates the match. If no user-defined pattern matches, the item is included unless it matches a built-in default exclusion (like!.*). -

Target Handling: Explicitly targeted files bypass rule checks. Explicitly targeted directories become the root for rule discovery and matching for their contents. Output paths always remain relative to the original

project_root.

Examples (.contextfiles)

Example 1: Include Python Source and Root Config

Located at my_project/.contextfiles:

# Include all Python files in the src directory and subdirectories

src/**/*.py

# Include the main config file at the root of the project

/config.json

# Include all markdown files anywhere

*.md

# Exclude any test data directories found anywhere

!**/test_data/

Example 2: Overriding in a Subdirectory

Located at my_project/src/.contextfiles:

# In addition to rules inherited from parent .contextfiles...

# Include specific utility scripts in this directory

utils/*.sh

# Exclude a specific generated file within src, even if *.py is included elsewhere

!generated_parser.py

Development

-

Design Details: DESIGN.md

-

Running Server Locally: During development (after installing with

uv pip install -e .or similar), you can run the server module directly:python -m jinni.server [OPTIONS]Example MCP client configuration for local development:

{ "mcpServers": { "jinni": { // Adjust python path if needed, or ensure the correct environment is active "command": "python -m jinni.server" // Optionally constrain the server to only read within a tree (recommended for security): // "command": "python -m jinni.server --root /absolute/path/to/repo" } } }

Troubleshooting

Context Size Errors (DetailedContextSizeError)

If you encounter an error indicating the context size limit was exceeded, Jinni will provide a list of the 10 largest files it attempted to include. This helps you identify potential candidates for exclusion.

To resolve this:

- Review the Largest Files: Check the list provided in the error message. Are there large files (e.g., data files, logs, build artifacts, media) that shouldn't be part of the LLM's context?

-

Configure Exclusions: Use

.contextfilesor the--overrides/rulesoptions to exclude unnecessary files or directories.-

Example (

.contextfiles): To exclude all.logfiles and a specific large data directory:# Exclude all log files !*.log # Exclude a large data directory !large_data_files/ - Refer to the Configuration section above for detailed syntax and usage.

-

Example (

-

Increase the Limit (Use with Caution): If all included files are genuinely necessary, you can increase the size limit using

--size-limit-mb(CLI) orsize_limit_mb(MCP). Be mindful of LLM context window limits and processing costs. -

Use

jinni usage/usage: If you need to refer back to these instructions or the configuration details while troubleshooting, use thejinni usagecommand or theusageMCP tool.

相关推荐

I find academic articles and books for research and literature reviews.

Confidential guide on numerology and astrology, based of GG33 Public information

Converts Figma frames into front-end code for various mobile frameworks.

Advanced software engineer GPT that excels through nailing the basics.

Take an adjectivised noun, and create images making it progressively more adjective!

Embark on a thrilling diplomatic quest across a galaxy on the brink of war. Navigate complex politics and alien cultures to forge peace and avert catastrophe in this immersive interstellar adventure.

Discover the most comprehensive and up-to-date collection of MCP servers in the market. This repository serves as a centralized hub, offering an extensive catalog of open-source and proprietary MCP servers, complete with features, documentation links, and contributors.

Micropython I2C-based manipulation of the MCP series GPIO expander, derived from Adafruit_MCP230xx

The all-in-one Desktop & Docker AI application with built-in RAG, AI agents, No-code agent builder, MCP compatibility, and more.

Bridge between Ollama and MCP servers, enabling local LLMs to use Model Context Protocol tools

Fair-code workflow automation platform with native AI capabilities. Combine visual building with custom code, self-host or cloud, 400+ integrations.

🧑🚀 全世界最好的LLM资料总结(Agent框架、辅助编程、数据处理、模型训练、模型推理、o1 模型、MCP、小语言模型、视觉语言模型) | Summary of the world's best LLM resources.

Reviews

user_i576mx5O

As a loyal MCP application user, I've been highly impressed by jinni from smat-dev. The seamless integration and user-friendly interface make it a standout tool. Plus, it's open-source, which has allowed me to customize it to fit my needs perfectly. The documentation and support from the community have been outstanding. Highly recommend!